June 27, 2025

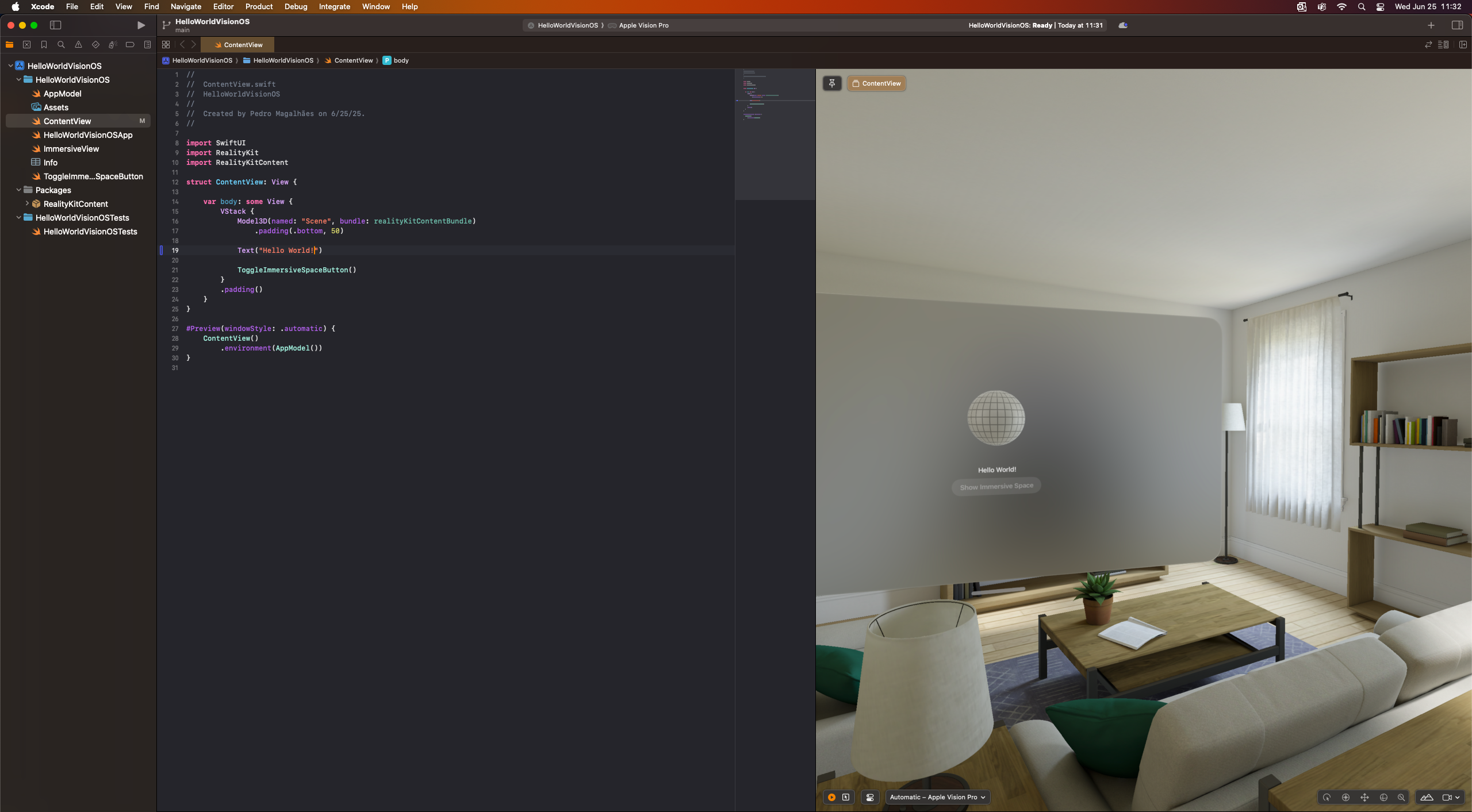

Building for Apple Vision Pro: Part 1

Pedro Cisdeli

Static detection, live-camera hurdles, and the APIs we can already use

This post kicks off a short series documenting my hands-on journey building ag-tech tools for Apple Vision Pro (AVP).

1. Static image / video object detection

Goal achieved, with a few twists.

- Core ML export needs built-in NMS. Converting my YOLO v11 model required this Python call: `model.export(format="coreml", nms=True, task="detect")`. Without `nms=True`, Vision returns raw tensors instead of neat `VNRecognizedObjectObservation` results.

- Segmentation quirks: YOLO-Seg exports run, but Vision treats their mask output as a generic multi-array, with no automatic drawing or post-processing. In the near future, I will make my code for interpreting the segmentation model output available.

- Outcome: With a detection-only model and the standard `confidence` + `coordinates` outputs, AVP happily runs inference on still photos and saved videos.
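To make the role of `nms=True` concrete, here is a minimal pure-Python sketch of greedy non-maximum suppression (NMS), the post-processing step that the export flag bakes into the Core ML model. It illustrates the general technique only and is not the code that Ultralytics or Core ML actually runs. The `(x1, y1, x2, y2)` box format, the function names, the sample boxes, and the 0.45 IoU threshold are all assumptions chosen for the example.

```python
def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.45):
    """Return the indices of the boxes that survive, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        # Keep a box only if it does not overlap too much with a better one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept


# Hypothetical raw predictions: two near-duplicates and one separate box.
boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 260, 260)]
scores = [0.90, 0.75, 0.60]
kept = greedy_nms(boxes, scores)
print(kept)
```

Without a step like this, a model emits many overlapping candidates per object, which is why an export without NMS leaves the app to handle raw tensors itself.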

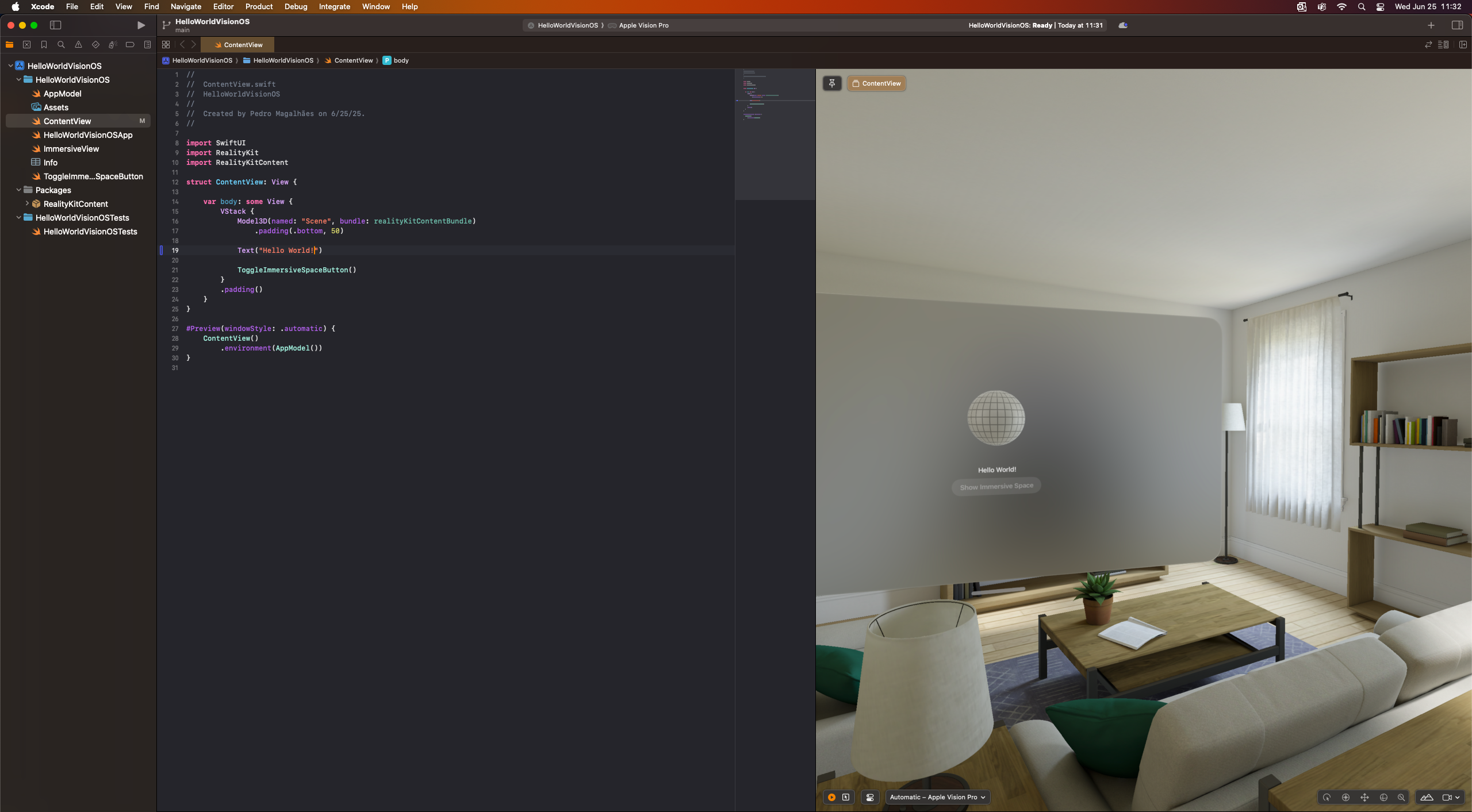

Static Image Detection on AVP Simulator via Core ML and Swift (Image credits: Ultralytics)
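Drawing boxes like the ones in the screenshot comes down to decoding the two model outputs. The sketch below shows the idea in Python. It assumes `confidence` holds one row of per-class scores per detection and `coordinates` holds normalised `[x_center, y_center, width, height]` rows, which is the usual layout for Core ML models exported with NMS; verify this against your own model's output description. The function name, the label list, and the 0.25 score cut-off are hypothetical.

```python
def decode_detections(confidence, coordinates, labels,
                      image_w, image_h, min_conf=0.25):
    """Turn (confidence, coordinates) rows into labelled pixel boxes.

    Assumes coordinates are normalised [x_center, y_center, width, height]
    with the origin at the top-left of the image.
    """
    detections = []
    for class_scores, (cx, cy, w, h) in zip(confidence, coordinates):
        best = max(range(len(class_scores)), key=lambda i: class_scores[i])
        score = class_scores[best]
        if score < min_conf:
            continue  # skip low-confidence rows
        left = (cx - w / 2) * image_w
        top = (cy - h / 2) * image_h
        detections.append({
            "label": labels[best],
            "score": score,
            # (left, top, width, height) in pixels
            "box": (round(left), round(top),
                    round(w * image_w), round(h * image_h)),
        })
    return detections


# Hypothetical two-class model and two output rows.
labels = ["corn", "weed"]
confidence = [[0.90, 0.05], [0.10, 0.20]]
coordinates = [[0.5, 0.5, 0.2, 0.4], [0.1, 0.1, 0.1, 0.1]]
detections = decode_detections(confidence, coordinates, labels,
                               image_w=640, image_h=480)
print(detections)
```

When the request goes through Vision instead, the boxes arrive already wrapped in `VNRecognizedObjectObservation`, so this manual decoding is mainly useful for debugging the exported model outside the app.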

2. Live camera feed: the gatekeeper

- Raw passthrough access is enterprise-only (visionOS 2+). Public-store apps can't touch the main cameras for privacy reasons; access is behind the entitlement `com.apple.developer.arkit.main-camera-access.allow`.

- We are working on obtaining the necessary entitlement. In the meantime, I'm focusing on what's already possible with the publicly available ARKit stack, which offers a lot of capabilities for ag-tech applications, as detailed in topic #3 below.
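Once the entitlement is granted, a quick sanity check before building is to confirm that the key is present in the project's `.entitlements` property list. The sketch below uses Python's standard `plistlib` and assumes the entitlement is stored as a boolean key. The sample plist content and the helper name are made up for illustration; only the key string comes from the text above.

```python
import plistlib

MAIN_CAMERA_KEY = "com.apple.developer.arkit.main-camera-access.allow"


def has_main_camera_entitlement(entitlements_xml: bytes) -> bool:
    """Return True if the plist enables the main-camera entitlement."""
    entitlements = plistlib.loads(entitlements_xml)
    return entitlements.get(MAIN_CAMERA_KEY) is True


# Hypothetical contents of an .entitlements file.
SAMPLE = b"""<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <key>com.apple.developer.arkit.main-camera-access.allow</key>
    <true/>
</dict>
</plist>
"""

enabled = has_main_camera_entitlement(SAMPLE)
print(enabled)
```

In a real project you would read the bytes from the `.entitlements` file on disk rather than from an inline sample.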

3. What's already possible with the open ARKit stack

Even without live camera frames, AVP offers built-in functionalities for spatial data that can power practical ag-research tools:

| Capability | API | Ag use-cases |
|---|---|---|
| Mesh reconstruction | SceneReconstructionProvider | Digital plant phenotyping and data collection for digital twins by analysing the 3D mesh. |
| Plane & image anchors | PlaneDetectionProvider (PlaneAnchor), ImageTrackingProvider (ImageAnchor) | Tag field sensors or seed bags with QR/ArUco markers and pop up live metadata when viewed. |
| LiDAR depth on still photos | Photo-capture sheet + AVDepthData | More digital phenotyping without destructive sampling (check out Cisdeli et al., 2025). |
| Photogrammetry (Object Capture) | ObjectCaptureSession | Generate watertight meshes of cobs, leaves, or plants for later analysis. |
| External video ingestion | AVSampleBufferDisplayLayer, WebRTC/NDI | Stream a drone or tractor camera feed into AVP, run Core ML locally, and overlay detections. |
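As a small illustration of what "analysing the 3D mesh" can mean for phenotyping, the sketch below computes the surface area and height of a triangle mesh in plain Python. It assumes the mesh has already been exported from the headset as a list of vertices (in metres, y-axis up) and a list of triangle index triples. The export step, the function names, and the toy rectangle standing in for a leaf are all hypothetical.

```python
import math


def triangle_area(a, b, c):
    """Area of a 3D triangle: half the norm of the edge cross product."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    cross = (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def mesh_surface_area(vertices, faces):
    """Sum of triangle areas; faces are (i, j, k) vertex indices."""
    return sum(
        triangle_area(vertices[i], vertices[j], vertices[k])
        for i, j, k in faces
    )


def mesh_height(vertices, up_axis=1):
    """Extent of the mesh along the up axis (y by default)."""
    values = [v[up_axis] for v in vertices]
    return max(values) - min(values)


# Toy "leaf": an upright 0.2 m x 0.1 m rectangle split into two triangles.
vertices = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (0.2, 0.1, 0.0), (0.0, 0.1, 0.0)]
faces = [(0, 1, 2), (0, 2, 3)]

area = mesh_surface_area(vertices, faces)
height = mesh_height(vertices)
print(f"area = {area:.4f} m^2, height = {height:.2f} m")
```

Real reconstructed meshes are noisy and include the ground and surrounding objects, so a practical pipeline would first isolate the plant before measuring it.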

Next up in the series

- Implementing the functionalities discussed in topic #3.

- Testing the live camera feed once we get the entitlement.

Stay tuned!